Chapter 18 PowerPoint


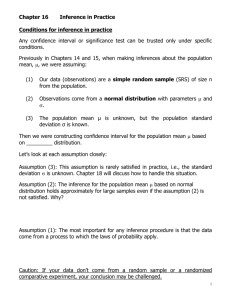

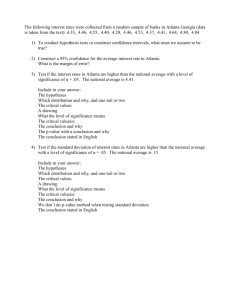

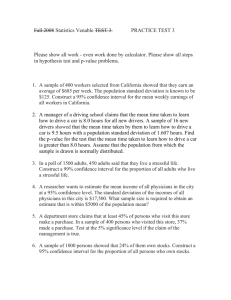

Chapter 18 Sections 1-4
Bell Ringer
I CAN:
Daily Agenda
1. Bell Ringer
2. Review Bell Ringer
3. Section 18

Any confidence interval or significance test can be trusted only under specific conditions. It’s up to you to understand these conditions and judge whether they fit your problem. With that in mind, let’s look back at the “simple conditions” for the z procedures.

The final “simple condition,” that we know the standard deviation σ of the population, is rarely satisfied in practice. The z procedures are therefore of little practical use. Fortunately, it’s easy to remove the “known σ” condition. Chapter 20 shows how.

The condition that the size of the population is large compared to the size of the sample is often easy to verify, and when it is not satisfied there are special, advanced methods for inference.

The other “simple conditions” (SRS, Normal population) are harder to escape. In fact, they represent the kinds of conditions needed if we are to trust almost any statistical inference. As you plan inference, you should always ask, “Where did the data come from?” and you must often also ask, “What is the shape of the population distribution?” This is the point where knowing mathematical facts gives way to the need for judgment.

When you use statistical inference, you are acting as if your data are a random sample or come from a randomized comparative experiment. If your data don’t come from a random sample or a randomized comparative experiment, your conclusions may be challenged. To answer the challenge, you must usually rely on subject-matter knowledge, not on statistics.

It is common to apply statistical inference to data that are not produced by random selection. When you see such a study, ask whether the data can be trusted as a basis for the conclusions of the study.

A psychologist is interested in how our visual perception can be fooled by optical illusions. Her subjects are students in Psychology 101 at her university.
Most psychologists would agree that it’s safe to treat the students as an SRS of all people with normal vision. There is nothing special about being a student that changes visual perception.

A sociologist at the same university uses students in Sociology 101 to examine attitudes toward poor people and antipoverty programs. Students as a group are younger than the adult population as a whole. Even among young people, students as a group come from more prosperous and better-educated homes. Even among students, this university isn’t typical of all campuses. Even on this campus, students in a sociology course may have opinions that are quite different from those of engineering students. The sociologist can’t reasonably act as if these students are a random sample from any interesting population.

These examples are typical. One is an actual SRS, two are situations in which common practice is to act as if the sample were an SRS, and in the remaining example procedures that assume an SRS are used for a quick analysis of data from a more complex random sample. There is no simple rule for deciding when you can act as if a sample is an SRS. Pay attention to these cautions:

• Practical problems such as nonresponse in samples or dropouts from an experiment can hinder inference even from a well-designed study. The NHANES survey has about an 80% response rate. This is much higher than opinion polls and most other national surveys, so by realistic standards NHANES data are quite trustworthy. (NHANES uses advanced methods to try to correct for nonresponse, but these methods work a lot better when response is high to start with.)
• Different methods are needed for different designs. The z procedures aren’t correct for random sampling designs more complex than an SRS. Later chapters give methods for some other designs, but we won’t discuss inference for really complex designs like that used by NHANES.
Always be sure that you (or your statistical consultant) know how to carry out the inference your design calls for.
• There is no cure for fundamental flaws like voluntary response surveys or uncontrolled experiments. Look back at the bad examples in Chapters 8 and 9 and steel yourself to just ignore data from such studies.

18.1 Rate the Lecture. A professor is interested in how the 500 students in his class will rate today’s lecture. He selects the first 20 students on his class list, reads the names at the beginning of the lecture, and asks them to go online to the course website after class and rate the lecture on a scale of 0 to 5. Which of the following is the most important reason why a confidence interval for the mean rating by all his students based on these data is of little use? Comment briefly on each reason to explain your answer.
(a) The number of students selected is small, so the margin of error will be large.
(b) Many of the students selected may not be in attendance and/or will not respond.
(c) The students selected can’t be considered a random sample from the population of all students in the course.

18.2 Running Red Lights. A survey of licensed drivers inquired about running red lights. One question asked, “Of every ten motorists who run a red light, about how many do you think will be caught?” The mean result for 880 respondents was x̄ = 1.92 and the standard deviation was s = 1.83. For this large sample, s will be close to the population standard deviation σ, so suppose we know that σ = 1.83.
(a) Give a 95% confidence interval for the mean opinion in the population of all licensed drivers.
(b) The distribution of responses is skewed to the right rather than Normal. This will not strongly affect the z confidence interval for this sample. Why not?
(c) The 880 respondents are an SRS from completed calls among 45,956 calls to randomly chosen residential telephone numbers listed in telephone directories. Only 5029 of the calls were completed.
This information gives two reasons to suspect that the sample may not represent all licensed drivers. What are these reasons?

18.3 Sampling Shoppers. A marketing consultant observes 50 consecutive shoppers at a department store the Friday after Thanksgiving, recording how much each shopper spends in the store. Suggest some reasons why it may be risky to act as if 50 consecutive shoppers at this particular time are an SRS of all shoppers at this store.

The most important caution about confidence intervals in general is a consequence of the use of a sampling distribution. A sampling distribution shows how a statistic such as x̄ varies in repeated random sampling. This variation causes random sampling error because the statistic misses the true parameter by a random amount. No other source of variation or bias in the sample data influences the sampling distribution. So the margin of error in a confidence interval ignores everything except the sample-to-sample variation due to choosing the sample randomly.

The margin of error in a confidence interval covers only ____________ ____________ __________.

Practical difficulties such as undercoverage and nonresponse are often more serious than random sampling error. The margin of error does not take such difficulties into account. Recall from Chapter 8 that national opinion polls often have response rates less than 50%, and that even small changes in the wording of questions can strongly influence results. In such cases, the announced margin of error is probably unrealistically _____________. And of course there is no way to assign a meaningful margin of error to results from voluntary response or convenience samples, because there is no random selection. Look carefully at the details of a study before you trust a confidence interval.

18.4 What’s Your Weight? A 2013 Gallup Poll asked a national random sample of 477 adult women to state their current weight. The mean weight in the sample was x̄ = 157.
We will treat these data as an SRS from a Normally distributed population with standard deviation σ = 35.
(a) Give a 95% confidence interval for the mean weight of adult women based on these data.
(b) Do you trust the interval you computed in part (a) as a 95% confidence interval for the mean weight of all U.S. adult women? Why or why not?

18.5 Good Weather, Good Tips? Example 16.3 (page 381) described an experiment exploring the size of the tip in a particular restaurant when a message indicating that the next day’s weather would be good was written on the bill. You work part-time as a server in a restaurant. You read a newspaper article about the study that reports that with 95% confidence the mean percentage tip from restaurant patrons will be between 21.33 and 23.09 when the server writes a message on the bill stating that the next day’s weather will be good. Can you conclude that if you begin writing a message on patrons’ bills that the next day’s weather will be good, approximately 95% of the days you work your mean percentage tip will be between 21.33 and 23.09? Why or why not?

18.6 Sample Size and Margin of Error. Example 16.1 (page 375) described NHANES data on the body mass index (BMI) of 654 young women. The mean BMI in the sample was x̄ = 26.8. We treated these data as an SRS from a Normally distributed population with standard deviation σ = 7.5.
(a) Suppose that we had an SRS of just 100 young women. What would be the margin of error for 95% confidence?
(b) Find the margins of error for 95% confidence based on SRSs of 400 young women and 1600 young women.
(c) Compare the three margins of error. How does increasing the sample size change the margin of error of a confidence interval when the confidence level and population standard deviation remain the same?

18.7 Do You Eat Fast Food?
A July 2013 Gallup poll asked, “How often, if ever, do you eat at fast-food restaurants, including drive-thru, take-out, and sitting down in the restaurant?” Of those sampled, 47% indicated they do so at least weekly. Gallup announced the poll’s margin of error for 95% confidence as ±3 percentage points. Which of the following sources of error are included in this margin of error?
(a) Gallup dialed landline telephone numbers at random and so missed all people without landline phones, including people whose only phone is a cell phone.
(b) Some people whose numbers were chosen never answered the phone in several calls or answered but refused to participate in the poll.
(c) There is chance variation in the random selection of telephone numbers.

Bell Ringer

Significance tests are widely used in most areas of statistical work.
- New pharmaceutical products require significant evidence of effectiveness and safety.
- Courts inquire about statistical significance in hearing class action discrimination cases.
- Marketers want to know whether a new package design will significantly increase sales.
- Medical researchers want to know whether a new therapy performs significantly better.
In all these uses, statistical significance is valued because it points to an effect that is unlikely to occur simply by chance.

Here are some points to keep in mind when you use or interpret significance tests.

How small a P is convincing?

The purpose of a test of significance is to describe the degree of evidence provided by the sample against the null hypothesis. The P-value does this. But how small a P-value is convincing evidence against the null hypothesis? This depends mainly on two circumstances:
- How plausible is H0? If H0 represents an assumption that the people you must convince have believed for years, strong evidence (small P) will be needed to persuade them.
- What are the consequences of rejecting H0?
If rejecting H0 in favor of Ha means making an expensive changeover from one type of product packaging to another, you need strong evidence that the new packaging will boost sales.

These criteria are a bit subjective. Different people will often insist on different levels of significance. Giving the P-value allows each of us to decide individually if the evidence is sufficiently strong.

Because large random samples have small chance variation, very small population effects can be highly significant if the sample is large. Because small random samples have a lot of chance variation, even large population effects can fail to be significant if the sample is small. Statistical significance does not tell us whether an effect is large enough to be important. That is, statistical significance is not the same thing as practical significance.

Keep in mind that “statistical significance” means “the sample showed an effect larger than would often occur just by chance.” The extent of chance variation changes with the size of the sample, so the size of the sample does matter. Exercise 18.9 demonstrates in detail how increasing the sample size drives down the P-value. Here is another example.

We are testing the hypothesis of no correlation between two variables. With 1000 observations, an observed correlation of only r = 0.08 is significant evidence at the 1% level that the correlation in the population is not zero but positive. The small P-value does not mean that there is a ___________ ____________, only that there is _________ __________ of some association. The true population correlation is probably quite close to the observed sample value, r = 0.08. We might well conclude that for practical purposes we can ignore the association between these variables, even though we are confident (at the 1% level) that the correlation is positive.

On the other hand, if we have only 10 observations, a correlation of r = 0.5 is not significantly greater than zero even at the 5% level.
Small samples vary so much that a large r is needed if we are to be confident that we aren’t just seeing chance variation at work. So a small sample will often fall short of significance even if the true population correlation is quite large.

Beware of multiple analyses

Statistical significance ought to mean that you have found an effect that you were looking for. The reasoning behind statistical significance works well if you decide what effect you are seeking, design a study to search for it, and use a test of significance to weigh the evidence you get. In other settings, significance may have little meaning.

Might the radiation from cell phones be harmful to users? Many studies have found little or no connection between using cell phones and various illnesses. Here is part of a news account of one study:

A hospital study that compared brain cancer patients and a similar group without brain cancer found no statistically significant association between cell phone use and a group of brain cancers known as gliomas. But when 20 types of glioma were considered separately an association was found between phone use and one rare form. Puzzlingly, however, this risk appeared to decrease rather than increase with greater mobile phone use.

Think for a moment. Suppose that the 20 null hypotheses (no association) for these 20 significance tests are all true. Then each test has a 5% chance of being significant at the 5% level. That’s what α = 0.05 means: results this extreme occur 5% of the time just by chance when the null hypothesis is true. Because 5% is 1/20, we expect about 1 of 20 tests to give a significant result just by chance. That’s what the study observed.

18.8 Is It Significant? In the absence of special preparation, SAT Mathematics (SATM) scores in 2013 varied Normally with mean μ = 514 and σ = 118. Fifty students go through a rigorous training program designed to raise their SATM scores by improving their mathematics skills.
Either by hand or by using the P-Value of a Test of Significance applet, carry out a test of
H0: μ = 514
Ha: μ > 514
(with σ = 118) in each of the following situations:
(a) The students’ average score is x̄ = 541. Is this result significant at the 5% level?
(b) The average score is x̄ = 542. Is this result significant at the 5% level?
The difference between the two outcomes in parts (a) and (b) is of no practical importance. Beware attempts to treat α = 0.05 as sacred.

18.9 Detecting Acid Rain. Emissions of sulfur dioxide by industry set off chemical changes in the atmosphere that result in “acid rain.” The acidity of liquids is measured by pH on a scale of 0 to 14. Distilled water has pH 7.0, and lower pH values indicate acidity. Normal rain is somewhat acidic, so acid rain is sometimes defined as rainfall with a pH below 5.0. Suppose that pH measurements of rainfall on different days in a Canadian forest follow a Normal distribution with standard deviation σ = 0.6. A sample of n days finds that the mean pH is x̄ = 4.8. Is this good evidence that the mean pH μ for all rainy days is less than 5.0? The answer depends on the size of the sample. Either by hand or using the P-Value of a Test of Significance applet, carry out four tests of
H0: μ = 5.0
Ha: μ < 5.0
Use σ = 0.6 and x̄ = 4.8 in all four tests. But use four different sample sizes: n = 9, n = 16, n = 36, and n = 64.
(a) What are the P-values for the four tests? The P-value of the same result x̄ = 4.8 gets smaller (more significant) as the sample size increases.
(b) For each test, sketch the Normal curve for the sampling distribution of x̄ when H0 is true. This curve has mean 5.0 and standard deviation 0.6/√n. Mark the observed x̄ = 4.8 on each curve. (If you use the applet, you can just copy the curves displayed by the applet.) The same result x̄ = 4.8 gets more extreme on the sampling distribution as the sample size increases.

18.10 Confidence Intervals Help.
Give a 95% confidence interval for the mean pH μ for each sample size in Exercise 18.9. The intervals, unlike the P-values, give a clear picture of what mean pH values are plausible for each sample.

18.11 Searching for ESP. A researcher looking for evidence of extrasensory perception (ESP) tests 1000 subjects. Nine of these subjects do significantly better (P < 0.01) than random guessing.
(a) Nine seems like a lot of people, but you can’t conclude that these nine people have ESP. Why not?
(b) What should the researcher now do to test whether any of these nine subjects have ESP?

A wise user of statistics never plans a sample or an experiment without at the same time planning the inference. The number of observations is a critical part of planning a study. Larger samples give smaller margins of error in confidence intervals and make significance tests better able to detect effects in the population. But taking observations costs both time and money. How many observations are enough? We will look at this question first for confidence intervals and then for tests. Planning a confidence interval is much simpler than planning a test. It is also more useful, because estimation is generally more informative than testing. The section on planning tests is therefore optional.

You can arrange to have both high confidence and a small margin of error by taking enough observations. The margin of error of the z confidence interval for the mean of a Normally distributed population is m = z*σ/√n. To obtain a desired margin of error m, put in the value of z* for your desired confidence level, and solve for the sample size n. Here is the result:

n = (z*σ/m)²

18.12 Body Mass Index of Young Women. Example 16.1 (page 375) assumed that the body mass index (BMI) of all American young women follows a Normal distribution with standard deviation σ = 7.5. How large a sample would be needed to estimate the mean BMI μ in this population to within ± 1 with 95% confidence?

18.13 Number Skills of Eighth Graders.
Suppose that scores on the mathematics part of the National Assessment of Educational Progress (NAEP) test for eighth-grade students follow a Normal distribution with standard deviation σ = 125. You want to estimate the mean score within ± 10 with 90% confidence. How large an SRS of scores must you choose?
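
The sample-size planning step can be checked numerically. Solving the margin-of-error formula m = z*σ/√n for n gives n = (z*σ/m)², rounded up to the next whole observation. The sketch below is only an illustration under stated assumptions: the function names are made up for this example, and the values σ = 15 and m = 3 are hypothetical, chosen so that no exercise answer is given away.

```python
import math
from statistics import NormalDist


def z_critical(confidence):
    """Two-sided critical value z* for a confidence level such as 0.95."""
    return NormalDist().inv_cdf(1 - (1 - confidence) / 2)


def margin_of_error(sigma, n, confidence=0.95):
    """Margin of error m = z* * sigma / sqrt(n) of the z interval (known sigma)."""
    return z_critical(confidence) * sigma / math.sqrt(n)


def required_sample_size(sigma, m, confidence=0.95):
    """Smallest whole n with margin of error at most m: n = (z* * sigma / m)^2, rounded up."""
    n_exact = (z_critical(confidence) * sigma / m) ** 2
    return math.ceil(n_exact)


# Hypothetical planning values, not taken from any exercise in this chapter.
sigma = 15.0  # assumed known population standard deviation
m = 3.0       # desired margin of error

n = required_sample_size(sigma, m)
print("observations needed:", n)
print("achieved margin of error:", round(margin_of_error(sigma, n), 3))
```

Always round n up, never to the nearest integer: with these hypothetical values the formula gives a little over 96, and 96 observations would leave the margin of error slightly above 3, so 97 are needed. The calculation covers random sampling error only and assumes an SRS from a Normal population with known σ.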