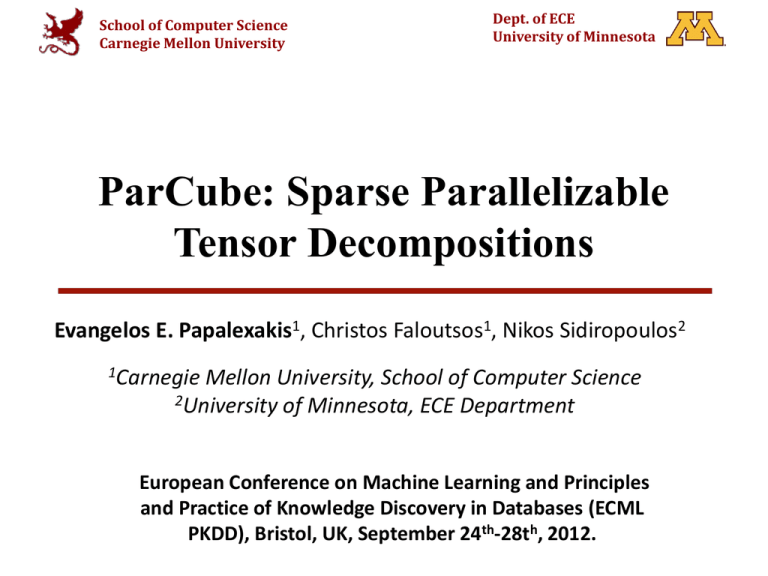

Evangelos Papalexakis (CMU) * ECML


ParCube: Sparse Parallelizable Tensor Decompositions

Evangelos E. Papalexakis (1), Christos Faloutsos (1), Nikos Sidiropoulos (2)
(1) Carnegie Mellon University, School of Computer Science
(2) University of Minnesota, ECE Department

European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases (ECML PKDD), Bristol, UK, September 24th-28th, 2012.

Outline
• Introduction
• Problem Statement
• Method
• Experiments
• Conclusions

Introduction
• Facebook has ~800 million users, and the network evolves over time.
• How do we spot interesting patterns and anomalies in this very large network?

Introduction
• Suppose we have knowledge base data, e.g. from the Read the Web project at CMU: subject-verb-object triplets mined from the web.
• That is many gigabytes or terabytes of data!
• How do we find potential new synonyms for a word using this knowledge base?

Introduction to Tensors
• Tensors are multidimensional generalizations of matrices.
• The previous problems can be formulated as tensors: time-evolving graphs/social networks and multi-aspect data (e.g. subject, object, verb).
• We focus on 3-way tensors, which can be viewed as data cubes indexed by 3 variables (I × J × K).
[Figure: a 3-way data cube with subject and object modes]

Introduction to Tensors
• PARAFAC decomposition: decompose a tensor into a sum of outer products (rank-1 tensors), X ≈ a_1 ∘ b_1 ∘ c_1 + ... + a_F ∘ b_F ∘ c_F.
• Each rank-1 tensor is a different group or “concept”.
• It is “similar” to the Singular Value Decomposition in the matrix case.
• Store the factor vectors a_i, b_i, c_i as the columns of matrices A, B, C.
[Figure: example concepts over the subject and object modes, e.g. “leaders/CEOs” and “products”]

Why not PARAFAC?
• Today’s datasets are on the order of terabytes, e.g. Facebook has ~800 million users!
• Complexity and run time explode for truly large datasets!
• Also, the data is very sparse, so we need the decomposition factors to be sparse too: this gives better interpretability and less noise, and lets us do multi-way soft co-clustering.
• PARAFAC is dense!

Problem Statement
Wish list:
• Significantly reduce the dimensionality, ideally by 1 or more orders of magnitude.
• Parallelize the computation, ideally by splitting the problem into independent parts that run in parallel.
• Yield sparse factors.
• Don’t lose much in the process.

Previous work
• A.H. Phan et al., Block decomposition for very large-scale nonnegative tensor factorization; Q. Zhang et al., A parallel nonnegative tensor factorization algorithm for mining global climate data.
  Partition & merge parallel algorithms for nonnegative PARAFAC; no sparsity.
• D. Nion et al., Adaptive algorithms to track the PARAFAC decomposition of a third-order tensor; J. Sun et al., Beyond streams and graphs: dynamic tensor analysis.
  The tensor is a stream; both methods seek to track the decomposition.
• C.E. Tsourakakis, MACH: Fast randomized tensor decompositions; J. Sun et al., MultiVis: Content-based social network exploration through multi-way visual analysis.
  Sampling-based TUCKER models.
• E.E. Papalexakis et al., Co-clustering as multilinear decomposition with sparse latent factors.
  Sparse PARAFAC algorithm applied to co-clustering.

Our proposal
We introduce PARCUBE and set the following goals:
• Goal 1: Fast (scalable and parallelizable).
• Goal 2: Sparse (ability to yield sparse latent factors and a sparse tensor approximation).
• Goal 3: Accurate (provable correctness in merging partial results, under appropriate conditions).

PARCUBE: The big picture
• Break up the tensor into small pieces using sampling.
• Fit a dense PARAFAC decomposition on each small sampled tensor.
• Match columns and distribute the non-zero values to the appropriate indices in the original (non-sampled) space.
• Sampling selects a small portion of the indices, so the PARAFAC vectors a_i, b_i, c_i will be sparse by construction.
[Figure: Example of rank-1 PARAFAC using ParCube (Algorithm 3): r independent samples of X yield r triplets of vectors, which are combined by distributing their values to the original-sized triplets.]

The PARCUBE method
Key ideas:
• Use biased sampling to sample rows, columns and fibers, with a sampling weight for each index.
• During sampling, always keep a common portion of indices across samples.
• For each smaller tensor, compute the PARAFAC decomposition.
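The sampling step in the key ideas above can be sketched in NumPy. This is a minimal sketch, not the authors' implementation, and it rests on several assumptions: the tensor is stored in coordinate (COO) form, the sampling weight of an index is taken to be the sum of absolute values in its slice (for mode 1, w(i) = Σ_j Σ_k |X(i,j,k)|), the shared indices are placed first in every sample, and a divisor s shrinks each mode from I to about I/s indices. The helper names `mode_weights`, `sample_mode` and `sample_tensor` are hypothetical.

```python
import numpy as np


def mode_weights(idx, vals, dim):
    # Assumed sampling weight of each index in one mode:
    # the sum of |x| over all nonzeros in that index's slice.
    return np.bincount(idx, weights=np.abs(vals), minlength=dim)


def sample_mode(weights, n_keep, common, rng):
    # Always keep the shared `common` indices (placed first), then draw the
    # rest without replacement, with probability proportional to weight.
    common = np.asarray(common, dtype=int)
    n_keep = max(n_keep, len(common))
    p = np.asarray(weights, dtype=float).copy()
    p[common] = 0.0
    n_extra = min(n_keep - len(common), np.count_nonzero(p))
    if n_extra == 0:
        return common
    extra = rng.choice(len(p), size=n_extra, replace=False, p=p / p.sum())
    return np.concatenate([common, extra])


def sample_tensor(coords, vals, shape, s, common, rng):
    # coords: (nnz, 3) int array of (i, j, k); vals: (nnz,) nonzero values.
    # common: one array of shared indices per mode, identical for all samples.
    picked = []
    for mode in range(3):
        w = mode_weights(coords[:, mode], vals, shape[mode])
        picked.append(sample_mode(w, shape[mode] // s, common[mode], rng))

    # Map each original index to its position in the sample (-1 = dropped).
    local = []
    for mode, idx in enumerate(picked):
        m = np.full(shape[mode], -1)
        m[idx] = np.arange(len(idx))
        local.append(m)
    a, b, c = (local[mode][coords[:, mode]] for mode in range(3))
    keep = (a >= 0) & (b >= 0) & (c >= 0)

    # Small dense tensor holding only the sampled nonzeros.
    sub = np.zeros([len(idx) for idx in picked])
    np.add.at(sub, (a[keep], b[keep], c[keep]), vals[keep])
    return sub, picked
```

Calling `sample_tensor` r times with the same `common` and a shared `rng` gives r different small tensors whose first rows coincide, which is what later lets their factors be aligned. Each `sub` is then small enough for an ordinary dense PARAFAC solver.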
Two parameters need to be specified:
• Sampling rate s: the initial dimensions I, J, K are reduced to I/s, J/s, K/s.
• Number of repetitions (different sampled tensors): r.

Putting the pieces together
• Say we have a factor matrix from each sample (A_1, ..., A_r).
• The factors (columns) may be re-ordered across samples.
• Each matrix corresponds to a different sampled index set of the original index space.
• All factors share the “upper” part (by construction).
Proposition: under mild conditions, the algorithm stitches the components together correctly and outputs what exact PARAFAC would.

Experiments
• We use the Tensor Toolbox for Matlab: PARAFAC for the baseline and for the core implementation.
• Evaluation of performance: algorithm correctness, execution speedup, factor sparsity.

Experiments: Correctness for multiple repetitions
• Relative cost = PARCUBE approximation cost / PARAFAC approximation cost.
• The more samples we take, the closer we get to exact PARAFAC.
• This is experimental validation of our theoretical result.
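The stitching step from “Putting the pieces together” and the relative cost metric can be sketched as follows. This is a simplified sketch under stated assumptions, not the algorithm from the paper: it assumes each sample's factor matrix is exact up to column permutation and scaling, that the first `n_common` rows of every sample correspond to the same shared indices, and that the approximation cost is the squared Frobenius norm of the residual. Column matching brute-forces permutations, so it only suits a small rank. All function names are hypothetical.

```python
import itertools

import numpy as np


def match_columns(ref, cur):
    # Column permutation of `cur` that best aligns with `ref` on the shared
    # rows (max total |cosine similarity|). Brute force: small ranks only.
    def unit(M):
        return M / np.maximum(np.linalg.norm(M, axis=0), 1e-12)

    sim = np.abs(unit(ref).T @ unit(cur))  # sim[r, q]: ref column r vs cur column q
    rank = sim.shape[0]
    best = max(itertools.permutations(range(rank)),
               key=lambda perm: sum(sim[r, perm[r]] for r in range(rank)))
    return list(best)


def merge_mode(factors, picked, dim, n_common):
    # factors[t]: factor matrix of one mode from sample t, one row per sampled
    # index; picked[t]: the original indices of those rows, with the
    # n_common shared indices first. Sample 0 fixes column order and scaling.
    ref = factors[0][:n_common]
    total = np.zeros((dim, ref.shape[1]))
    count = np.zeros((dim, 1))
    for F, idx in zip(factors, picked):
        F = F[:, match_columns(ref, F[:n_common])]
        cur = F[:n_common]
        # Least-squares rescaling on the shared rows (also undoes sign flips).
        scale = (ref * cur).sum(axis=0) / np.maximum((cur * cur).sum(axis=0), 1e-12)
        total[idx] += F * scale
        count[idx] += 1
    # Average rows seen in several samples; never-sampled rows stay zero.
    return total / np.maximum(count, 1)


def reconstruct(A, B, C):
    # PARAFAC model: sum over columns r of the outer products a_r ∘ b_r ∘ c_r.
    return np.einsum('ir,jr,kr->ijk', A, B, C)


def relative_cost(X, parcube_factors, parafac_factors):
    # Assumed cost: squared Frobenius norm of the residual.
    def cost(factors):
        return np.sum((X - reconstruct(*factors)) ** 2)

    return cost(parcube_factors) / cost(parafac_factors)
```

Rows that no sample touched stay exactly zero, which is where the sparsity of the merged factors comes from. Running `merge_mode` once per mode (A, B, C) and comparing against a full PARAFAC with `relative_cost` mirrors the correctness measurement above.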
Experiments: Correctness & Speedup for 1 repetition
• Relative cost = PARCUBE approximation cost / PARAFAC approximation cost.
• Speedup = PARAFAC execution time / PARCUBE execution time.
• Extrapolating to parallel execution of 4 repetitions yields a 14.2x speedup (and improves accuracy).

Experiments: Correctness & Sparsity
• Output size = NNZ(A) + NNZ(B) + NNZ(C).
• 90% sparser than PARAFAC while maintaining the same approximation error.

Experiments: Knowledge Discovery
• ENRON email/social network: 186 × 186 × 44
• Network traffic data (LBNL): 65170 × 65170 × 65327
• FACEBOOK Wall posts: 63891 × 63890 × 1847
• Knowledge base data (Never Ending Language Learner, NELL): 14545 × 14545 × 28818

Discovery: ENRON
• Who-emailed-whom data from the ENRON email dataset, spanning 44 months: a 184 × 184 × 44 tensor.
• We picked s = 2, r = 4.
• We were able to identify social cliques and spot spikes that correspond to actual important events in the company’s timeline.

Discovery: LBNL Network Data
• Network traffic data of the form (src IP, dst IP, port #): a 65170 × 65170 × 65327 tensor.
• We picked s = 5, r = 10.
• We were able to identify a possible port-scanning attack.
[Figure: the discovered component, involving 1 src and 1 dst]

Discovery: FACEBOOK Wall posts
• A small portion of Facebook’s users: 63890 users over 1847 days.
• We picked s = 100, r = 10.
• Data in the form (Wall owner, poster, timestamp), downloaded from http://socialnetworks.mpi-sws.org/datawosn2009.html
• We were able to identify a birthday-like event.
[Figure: the discovered component, involving 1 Wall and 1 day]

Discovery: NELL
• Knowledge base data, taken from the Read the Web project at CMU (http://rtw.ml.cmu.edu/rtw/). Special thanks to Tom Mitchell for the data.
• Noun phrase × context × noun phrase triplets, e.g. (‘Obama’, ‘is’, ‘the president of the United States’).
• Goal: discover words that may be used in the same context.
• We picked s = 500, r = 10.

Conclusions
• Goal 1: Fast (scalable and parallelizable).
• Goal 2: Sparse (ability to yield sparse latent factors and a sparse tensor approximation).
• Goal 3: Accurate (provable correctness in merging partial results, under appropriate conditions).
Experiments also demonstrate that PARCUBE:
• enables processing of tensors that don’t fit in memory;
• yields interesting findings in diverse knowledge discovery settings.

The End
Evangelos E. Papalexakis. Email: epapalex@cs.cmu.edu Web: http://www.cs.cmu.edu/~epapalex
Christos Faloutsos. Email: christos@cs.cmu.edu Web: http://www.cs.cmu.edu/~christos
Nicholas Sidiropoulos. Email: nikos@umn.edu Web: http://www.ece.umn.edu/users/nikos/